Odo: Depth-Guided Diffusion for Identity-Preserving Body Reshaping

August 14, 2025

Abstract

Human shape editing enables controllable transformation of a person’s body shape (for example, thin, muscular, or overweight) while preserving pose, identity, clothing, and background. Unlike human pose editing, which has advanced rapidly, shape editing remains relatively underexplored. Current approaches typically rely on 3D morphable models or image warping, which often introduce unrealistic body proportions, texture distortions, and background inconsistencies because of alignment errors and deformations. A key limitation is the lack of large-scale, publicly available datasets for training and evaluating body shape manipulation methods. In this work, we introduce the first large-scale dataset designed specifically for controlled human shape editing, comprising 18,573 images across 1,523 subjects. It features diverse variations in body shape, including thin, muscular, and overweight, captured under consistent identity, clothing, and background conditions. Using this dataset, we propose Odo, an end-to-end diffusion-based method that enables realistic and intuitive body reshaping guided by simple semantic attributes. Our approach combines a frozen UNet, which preserves fine-grained appearance and background details from the input image, with a ControlNet that guides the shape transformation using target SMPL depth maps. Extensive experiments demonstrate that our method outperforms prior approaches, achieving per-vertex reconstruction errors as low as 7.5 mm, compared with 13.6 mm for baseline methods, while producing realistic results that accurately match the desired target shapes.
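The per-vertex reconstruction error quoted above is reported without its exact evaluation protocol on this page. As a minimal sketch of how such a metric is commonly computed, the snippet below averages the Euclidean distance between corresponding mesh vertices. It assumes that the predicted and target meshes share vertex ordering, that coordinates are in meters, and that no extra alignment step is applied; the function name `mean_per_vertex_error_mm` is hypothetical and not taken from the paper.

```python
import math
from typing import Sequence, Tuple

Vertex = Tuple[float, float, float]


def mean_per_vertex_error_mm(pred: Sequence[Vertex], target: Sequence[Vertex]) -> float:
    """Mean Euclidean distance between corresponding vertices, in millimeters.

    Assumptions (not from the paper): both meshes list vertices in the same
    order, and coordinates are given in meters.
    """
    if len(pred) != len(target):
        raise ValueError("meshes must have the same number of vertices")
    if not pred:
        raise ValueError("meshes must contain at least one vertex")
    total = sum(math.dist(p, t) for p, t in zip(pred, target))
    return 1000.0 * total / len(pred)  # meters -> millimeters


# Toy two-vertex example; the coordinates are invented for illustration.
toy_pred = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
toy_target = [(0.003, 0.004, 0.0), (0.0, 0.0, 0.015)]
toy_error = mean_per_vertex_error_mm(toy_pred, toy_target)
```

A real evaluation would run over the full set of mesh vertices for each test image and average across the test set.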

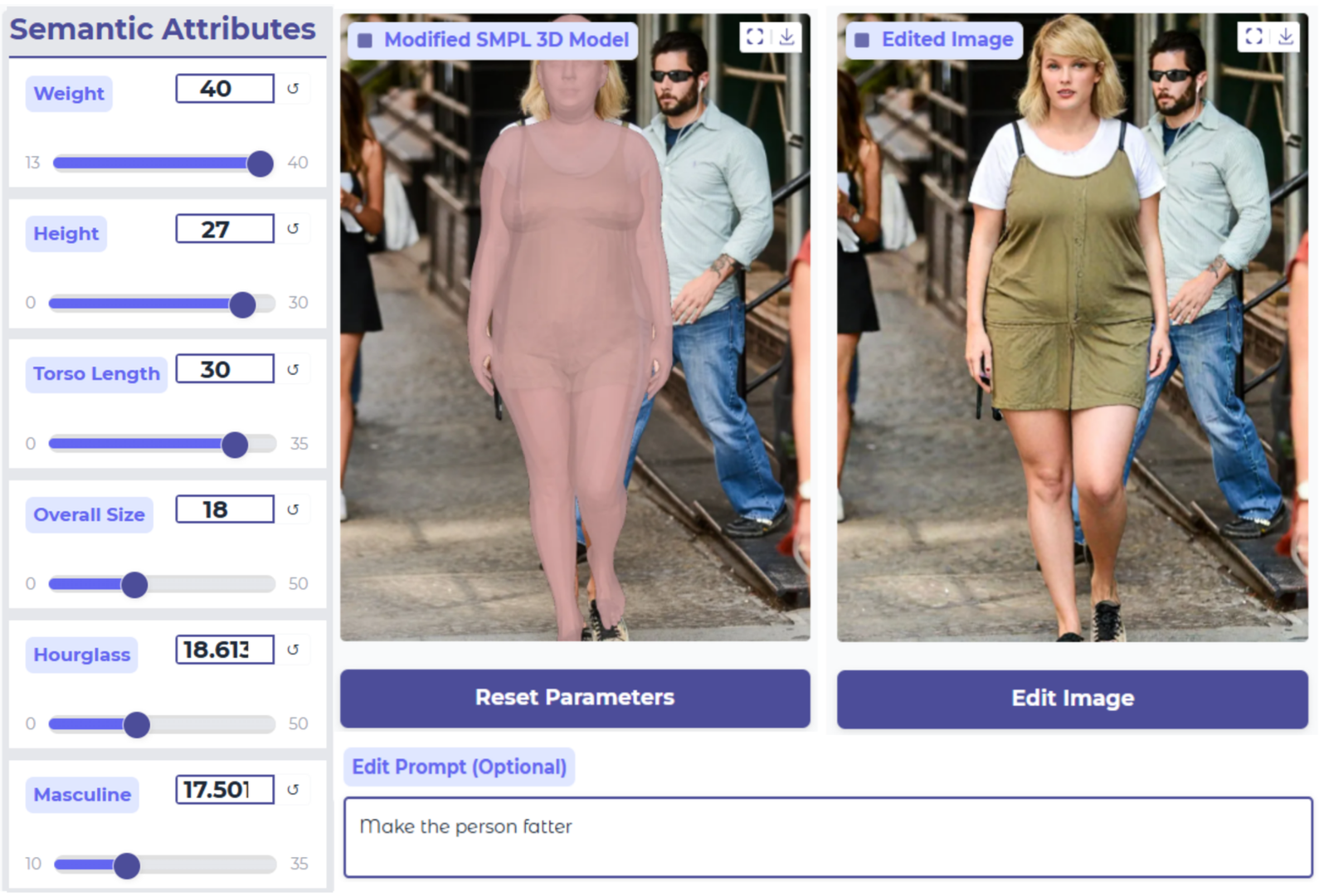

ChangeLing18K Dataset

We introduce ChangeLing18K, the first large-scale dataset specifically curated for human shape editing. It features diverse body types, including thin, muscular, and overweight. Each image pair depicts the same subject with different body shapes while keeping identity, pose, clothing, and viewpoint consistent.

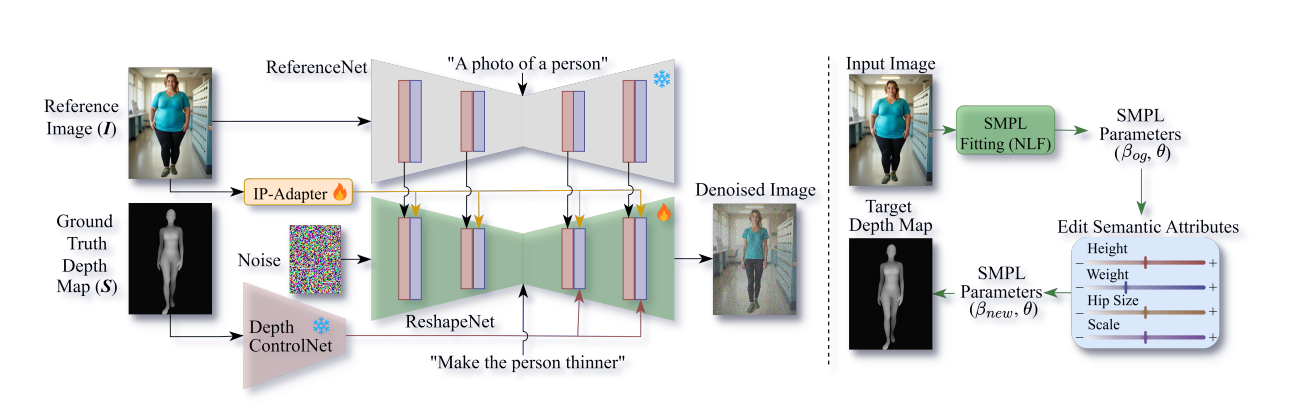

Method Overview

The left figure illustrates the training pipeline: the SMPL depth map extracted from the target image conditions the ReshapeNet through a ControlNet, steering generation toward the desired shape. Features from the reference image, extracted by the ReferenceNet, are integrated into the ReshapeNet through spatial self-attention. The right figure depicts the inference pipeline: SMPL parameters are initialized from the input image, adjusted according to the chosen semantic attributes, and rendered into a target SMPL depth map. This depth map, together with features of the input image, conditions the ReshapeNet, which generates the final transformed output through iterative denoising.
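Two of the inference steps described above can be sketched in a few lines: shifting SMPL shape coefficients according to a semantic attribute, and turning a rendered depth map into a normalized conditioning image. This is a minimal sketch under stated assumptions, not the authors' implementation. The attribute direction vectors, the `floor` parameter, and the function names `adjust_betas` and `normalize_depth` are all hypothetical. The sketch also assumes ten shape coefficients and an intensity in [0, 1]. SMPL fitting, mesh rendering, and the diffusion model itself are left out.

```python
from typing import Dict, List, Optional

NUM_BETAS = 10  # number of SMPL shape coefficients assumed in this sketch

# Hypothetical attribute directions in shape space. The values are invented
# for illustration and are NOT taken from the paper or from SMPL itself.
ATTRIBUTE_DIRECTIONS: Dict[str, List[float]] = {
    "thin": [0.0, 2.0] + [0.0] * (NUM_BETAS - 2),
    "overweight": [0.0, -2.0] + [0.0] * (NUM_BETAS - 2),
    "muscular": [0.5, -0.5, 1.0] + [0.0] * (NUM_BETAS - 3),
}


def adjust_betas(betas: List[float], attribute: str, intensity: float) -> List[float]:
    """Shift shape coefficients along an attribute direction, scaled by intensity."""
    if attribute not in ATTRIBUTE_DIRECTIONS:
        raise KeyError(f"unknown attribute: {attribute}")
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must lie in [0, 1]")
    if len(betas) != NUM_BETAS:
        raise ValueError(f"expected {NUM_BETAS} shape coefficients")
    direction = ATTRIBUTE_DIRECTIONS[attribute]
    return [b + intensity * d for b, d in zip(betas, direction)]


def normalize_depth(
    depth: List[List[Optional[float]]], floor: float = 0.1
) -> List[List[float]]:
    """Map a rendered depth map to [0, 1] for use as a conditioning image.

    None marks background pixels, which become 0.0. The nearest body pixel
    maps to 1.0 and the farthest to `floor`, so the body stays distinguishable
    from the background (a design choice of this sketch).
    """
    values = [d for row in depth for d in row if d is not None]
    if not values:
        return [[0.0 for _ in row] for row in depth]
    near, far = min(values), max(values)
    span = far - near
    result = []
    for row in depth:
        out_row = []
        for d in row:
            if d is None:
                out_row.append(0.0)          # background
            elif span == 0.0:
                out_row.append(1.0)          # flat depth: treat as nearest
            else:
                out_row.append(1.0 - (1.0 - floor) * (d - near) / span)
        result.append(out_row)
    return result


# Usage: start from neutral coefficients, move halfway toward "muscular",
# then normalize a toy 2x2 depth map (None = background).
target_betas = adjust_betas([0.0] * NUM_BETAS, "muscular", 0.5)
conditioning = normalize_depth([[None, 2.0], [3.0, 4.0]])
```

In a full pipeline, the adjusted coefficients would be passed to an SMPL model and a renderer to produce the depth map that `normalize_depth` receives.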

Paper

“Odo: Depth-Guided Diffusion for Identity-Preserving Body Reshaping”,

Siddharth Khandelwal, Sridhar Kamath, and Arjun Jain

arXiv preprint 2025

Resources

Dataset Download

Coming soon

GitHub Code

Coming soon

BibTeX

@misc{khandelwal2025ododepthguideddiffusionidentitypreserving,
  title={Odo: Depth-Guided Diffusion for Identity-Preserving Body Reshaping},
  author={Siddharth Khandelwal and Sridhar Kamath and Arjun Jain},
  year={2025},
  eprint={2508.13065},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2508.13065},
}

Ethics Statement

This research investigates generative models for body shape transformation in human images. We acknowledge the ethical sensitivities of this work.

Consent & Privacy: All images are from publicly available, research-approved datasets. The model is not trained on or intended for private or non-consensual images.

Responsible Use: This is a research prototype not intended for commercial or clinical use. We do not endorse its use for body shaming, promoting unrealistic body standards, or deceptive manipulation.

Transparency: AI-modified outputs must be disclosed as such.

Research Integrity: Our goal is advancing scientific understanding of conditional generative modeling, not creating tools for harmful or exploitative use.

Disclaimer: This project is for academic research only. Generated images should not be used in real-world applications without careful ethical consideration and explicit consent.